This is the first part in a series of posts covering how to download and analyze SEC filings. In this post I will show how to retrieve the URLs for the filings you want from the SEC server. Most advice floating around today relies on the now-discontinued SEC FTP servers, so at the very least it’s time for an update. The code that follows is in Python 2.7. The snippets are not ready to run out of context, but I’ve included a more production-ready version for downloading. I make no guarantees on the code being correct, or even good.

The structure of the SEC index files stored on their EDGAR server is as follows.

Year > Quarter > File type

For example, the directory structure for 2002 is

2002

- QTR1
    - company.gz
    - form.gz
    - master.gz
    - company.idx
    - form.idx
    - master.idx
- QTR2
- QTR3
- QTR4

There are more files here, but I’ve only included the ones relevant for downloading company filings. The .idx files are index files that hold the information we want, and the .gz files are compressed versions of them. We’ll download these compressed files en masse.

Our starting point will be the form.idx files. Each form.idx file has information on the filing form type, filing date, company name, company CIK (central index key) number, and the URL for downloading that specific filing from the EDGAR servers. We’ll eventually want to compile a list of all these URLs.

The process has five main steps:

- Download the form.gz files for all year-quarters.

- Unzip the form.gz files.

- Extract only the rows for the form types of interest, say the 10-K filings (10-K, 10-K/A, 10-K405, 10-K405/A, and 10-KT).

- Since the form.idx files are in fixed-width format, convert them to tab-delimited files.

- Last, combine all the submissions data for every year into one big file.

Each form.gz file is on the EDGAR server with a link:

https://www.sec.gov/Archives/edgar/full-index/$Year/$Quarter/form.gz
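To make the URL pattern concrete, here is a minimal sketch of a helper that fills in the year and quarter. The name `form_index_url` and the constant `FULL_INDEX_BASE` are made up for this illustration and do not appear in the full code.

```python
# Hypothetical helper for illustration; the names below are not from the original code.
FULL_INDEX_BASE = "https://www.sec.gov/Archives/edgar/full-index"


def form_index_url(year, quarter):
    """Return the URL of the compressed form index for one year-quarter."""
    if quarter not in (1, 2, 3, 4):
        raise ValueError("quarter must be 1, 2, 3 or 4, got %r" % (quarter,))
    return "%s/%d/QTR%d/form.gz" % (FULL_INDEX_BASE, year, quarter)


example_url = form_index_url(2002, 1)
print(example_url)
```

Validating the quarter up front means a typo fails immediately instead of producing a URL that the server will reject.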

1. Download the form.gz files for all year-quarters. In Python, I loop through all years from 1994 to 2016, using the urlretrieve() function of the urllib library. HOME_DIR is the working directory and is defined elsewhere.

    import urllib  # Python 2.7

    for YYYY in range(1994, 2017):
        for QTR in range(1, 5):
            urllib.urlretrieve("https://www.sec.gov/Archives/edgar/full-index/%s/QTR%s/form.gz" % (YYYY, QTR),
                               "%s/sec-downloads/form-%s-QTR%s.gz" % (HOME_DIR, YYYY, QTR))

The pattern above for looping through each year-quarter will be repeated often in the code that follows.
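Since the same nested loop shows up in every step, one option is to wrap it in a small generator. This is only a sketch; `year_quarters` is a hypothetical name, and the snippets in this post keep the explicit loops.

```python
from itertools import product


def year_quarters(first_year, last_year):
    """Yield (year, quarter) pairs for every quarter from first_year to last_year inclusive."""
    for year, quarter in product(range(first_year, last_year + 1), range(1, 5)):
        yield year, quarter


pairs = list(year_quarters(1994, 2016))
print(len(pairs))   # 23 years x 4 quarters
print(pairs[0], pairs[-1])
```

Each step could then iterate with `for YYYY, QTR in year_quarters(1994, 2016):` and the year range would live in one place.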

2. Unzip each form.gz file. I use the gzip library.

    import gzip

    for YYYY in range(1994, 2017):
        for QTR in range(1, 5):
            path_to_file = "%s/sec-downloads/form-%s-QTR%s.gz" % (HOME_DIR, YYYY, QTR)
            path_to_destination = "%s/sec-company-index-files/form-%s-QTR%s" % (HOME_DIR, YYYY, QTR)
            with gzip.open(path_to_file, 'rb') as infile:
                with open(path_to_destination, 'wb') as outfile:
                    for line in infile:  # stream the decompressed bytes to disk
                        outfile.write(line)
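An alternative to copying line by line is to let `shutil.copyfileobj` stream the decompressed bytes in chunks. The sketch below wraps that in a hypothetical `gunzip_file` helper and round-trips a throwaway file whose contents are made up, so it runs without touching the SEC server.

```python
import gzip
import os
import shutil
import tempfile


def gunzip_file(src_path, dst_path):
    """Decompress the gzip file at src_path into dst_path."""
    with gzip.open(src_path, "rb") as infile:
        with open(dst_path, "wb") as outfile:
            shutil.copyfileobj(infile, outfile)


# Round trip on a temporary file; the sample line is invented for the demo.
sample_bytes = b"10-K  EXAMPLE CORP  1234567  2002-03-12  edgar/data/1234567/sample.txt\n"
tmp_dir = tempfile.mkdtemp()
gz_path = os.path.join(tmp_dir, "form-sample.gz")
out_path = os.path.join(tmp_dir, "form-sample")
with gzip.open(gz_path, "wb") as f:
    f.write(sample_bytes)

gunzip_file(gz_path, out_path)
with open(out_path, "rb") as f:
    restored = f.read()
shutil.rmtree(tmp_dir)
```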

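3. Extract the lines in each unzipped form index file whose form type is a 10-K. I use a simple regular expression in re.search(). Note that the pattern ^10-K matches every form type that begins with 10-K, so it also picks up other types with that prefix, such as 10-KSB. The matches from all four quarters are collected before the yearly file is written; otherwise each quarter would overwrite the previous one.

    import re

    for YYYY in range(1994, 2017):
        outlines = []  # matches from all four quarters of this year
        for QTR in range(1, 5):
            with open("%s/sec-company-index-files/form-%s-QTR%s" % (HOME_DIR, YYYY, QTR), 'r') as infile:
                for line in infile:
                    if re.search(r'^10-K', line):
                        outlines.append(line)
        with open("%s/sec-company-index-files-combined/form-%s-10ks.txt" % (HOME_DIR, YYYY), 'w') as outfile:
            outfile.writelines(outlines)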
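If you want exactly the five form types listed earlier and nothing else that starts with 10-K, one option is to compare the first token of each line against a set. This is a sketch rather than what the full code does; `TARGET_FORMS` and `is_target_form` are hypothetical names, the sample lines are invented, and it assumes the wanted form types contain no spaces.

```python
# The five form types named in the post; everything else is rejected.
TARGET_FORMS = {"10-K", "10-K/A", "10-K405", "10-K405/A", "10-KT"}


def is_target_form(line, targets=TARGET_FORMS):
    """Return True if the first whitespace-delimited token of line is a wanted form type."""
    tokens = line.split(None, 1)
    return bool(tokens) and tokens[0] in targets


# Made-up index rows for the demo.
sample_lines = [
    "10-K        EXAMPLE CORP      1234567  2002-03-12  edgar/data/1234567/a.txt\n",
    "10-KSB      SMALL EXAMPLE CO  7654321  2002-03-13  edgar/data/7654321/b.txt\n",
    "10-Q        EXAMPLE CORP      1234567  2002-05-10  edgar/data/1234567/c.txt\n",
    "10-K405/A   OTHER EXAMPLE     1111111  2002-03-14  edgar/data/1111111/d.txt\n",
]
kept = [line for line in sample_lines if is_target_form(line)]
print(len(kept))
```

The trade-off is that an exact list silently misses any variant you forgot to include, while the prefix regex errs in the other direction.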

4. Convert the extracted form.idx rows to tab-delimited files. Rather than slicing at fixed column positions, the code splits each row on whitespace, takes the form type from the front and the CIK, filing date, and URL from the back, and treats whatever is left in the middle as the company name. To download the actual 10-K we will need to prepend https://www.sec.gov/Archives/ to the “url” field.

    header = '\t'.join(['formtype', 'companyname', 'cik', 'filingdate', 'url']) + '\n'
    url_base = "https://www.sec.gov/Archives/"

    for YYYY in range(1994, 2017):
        outline_list = []
        with open("%s/sec-company-index-files-combined/form-%s-10ks.txt" % (HOME_DIR, YYYY), "r") as infile:
            for currentLine in infile:
                currentLine = currentLine.split()
                n = len(currentLine)
                formType = currentLine[0]
                filingURL = currentLine[-1]
                filingDate = currentLine[n-2]
                CIK = currentLine[n-3]
                companyName = ' '.join(currentLine[1:(n-3)])  # every token between form type and CIK
                outLine = '\t'.join([formType, companyName, CIK, filingDate, url_base + filingURL]) + '\n'
                outline_list.append(outLine)
        with open("%s/output/form-%s-10ks-tdf.txt" % (HOME_DIR, YYYY), "w") as outfile:
            outfile.write(header)
            outfile.writelines(outline_list)
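Because the index really is fixed width, another way to split a row is to slice it at the column offsets implied by the header row. The sketch below assumes the header uses the titles Form Type, Company Name, CIK, Date Filed, and File Name; the helper names are hypothetical, and the column widths and sample row are invented so the example is self-contained. With a real file you would pass in its own header line.

```python
# Column titles assumed to appear, in this order, in the index header row.
COLUMN_TITLES = ["Form Type", "Company Name", "CIK", "Date Filed", "File Name"]


def column_starts(header_line, titles=COLUMN_TITLES):
    """Return the starting offset of each column title, searching left to right."""
    starts = []
    pos = 0
    for title in titles:
        pos = header_line.index(title, pos)
        starts.append(pos)
        pos += len(title)
    return starts


def split_fixed_width(line, starts):
    """Slice one fixed-width row at the given offsets and strip the padding."""
    bounds = list(starts) + [None]
    return [line[bounds[i]:bounds[i + 1]].strip() for i in range(len(starts))]


# Invented widths and data, used only to build an aligned header and row.
ROW = "{:<12}{:<22}{:<12}{:<12}{}"
sample_header = ROW.format(*COLUMN_TITLES)
sample_row = ROW.format("10-K", "EXAMPLE HOLDINGS INC", "1234567", "2002-03-12",
                        "edgar/data/1234567/0000000000-02-000001.txt")

starts = column_starts(sample_header)
fields = split_fixed_width(sample_row, starts)
print("\t".join(fields))
```

Slicing by offset does not care how many words are in the company name or whether the form type contains a space, which is where whitespace splitting can go wrong.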

5. Combine all the submissions data for every year into one big file.

    with open("%s/all-10k-submissions.txt" % HOME_DIR, 'w') as outfile:
        outfile.write(header)
        for YYYY in range(1994, 2017):
            with open("%s/output/form-%s-10ks-tdf.txt" % (HOME_DIR, YYYY), 'r') as infile:
                outfile.writelines(infile.readlines()[1:])  # drop each yearly header row

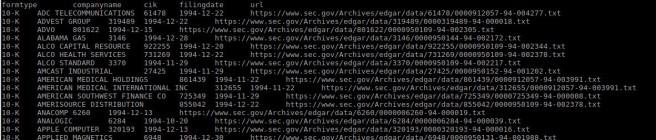

The output file will look like Figure 1 below.

Finally, we’ll get just the URLs for each 10-K, which we will request from the EDGAR server.

    url_index = 4  # "url" is the fifth column of the tab-delimited file
    outfilename = "urls.txt"
    outlines = []

    with open("%s/all-10k-submissions.txt" % HOME_DIR, 'r') as infile:
        next(infile)  # skip the header row
        for line in infile:
            line = line.strip().split('\t')
            url = line[url_index]
            outlines.append(url + '\n')

    with open("%s/%s" % (HOME_DIR, outfilename), 'w') as outfile:
        outfile.writelines(outlines)
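The same extraction can be done by column name instead of position with the standard `csv` module, which also takes care of the header row. This is a sketch written for Python 3 rather than the 2.7 used in the rest of the post; `read_urls` is a hypothetical name and the sample row is invented.

```python
import csv
import io


def read_urls(tsv_file):
    """Return the 'url' column from a tab-delimited file object that has a header row."""
    # QUOTE_NONE keeps quotation marks in company names from being treated as CSV quoting.
    reader = csv.DictReader(tsv_file, delimiter="\t", quoting=csv.QUOTE_NONE)
    return [row["url"] for row in reader]


# In-memory stand-in for all-10k-submissions.txt, with made-up data.
sample_file = io.StringIO(
    "formtype\tcompanyname\tcik\tfilingdate\turl\n"
    "10-K\tEXAMPLE CORP\t1234567\t2002-03-12\t"
    "https://www.sec.gov/Archives/edgar/data/1234567/0000000000-02-000001.txt\n"
)
urls = read_urls(sample_file)
print(urls)
```

Reading by name keeps working if the column order ever changes, at the cost of depending on the header text.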

The final urls.txt file has 233,135 10-K URLs on EDGAR. In the next post I will show a method for downloading these files quickly and efficiently. Figure 2 below shows the trend in the number of 10-Ks filed by filing year. We can see the oft-cited (e.g. here, here, and here) downward trend in recent years.

For curiosity’s sake, the top 10 10-K filers are shown in Figure 3 below.
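Tallies like these can be produced with `collections.Counter`. The sketch below is one way to do it, not necessarily how the figures were made: it assumes each row is a dict keyed by the tab-delimited column names, that filing dates start with a four-digit year, and that filers are grouped by company name. The function names and sample rows are invented.

```python
from collections import Counter


def filings_per_year(rows):
    """Count filings by the four-digit year at the start of the filingdate field."""
    return Counter(row["filingdate"][:4] for row in rows)


def top_filers(rows, n=10):
    """Return the n most frequent company names with their filing counts."""
    return Counter(row["companyname"] for row in rows).most_common(n)


# Made-up rows for the demo.
sample_rows = [
    {"companyname": "EXAMPLE CORP", "filingdate": "2001-03-30"},
    {"companyname": "EXAMPLE CORP", "filingdate": "2002-03-29"},
    {"companyname": "SAMPLE INC", "filingdate": "2002-04-01"},
]
by_year = filings_per_year(sample_rows)
top = top_filers(sample_rows, n=1)
print(sorted(by_year.items()))
print(top)
```

Grouping by CIK instead of company name would avoid splitting one filer across name changes.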

The full code is here.